Why do we expect aliens to be more altruistic than we are?

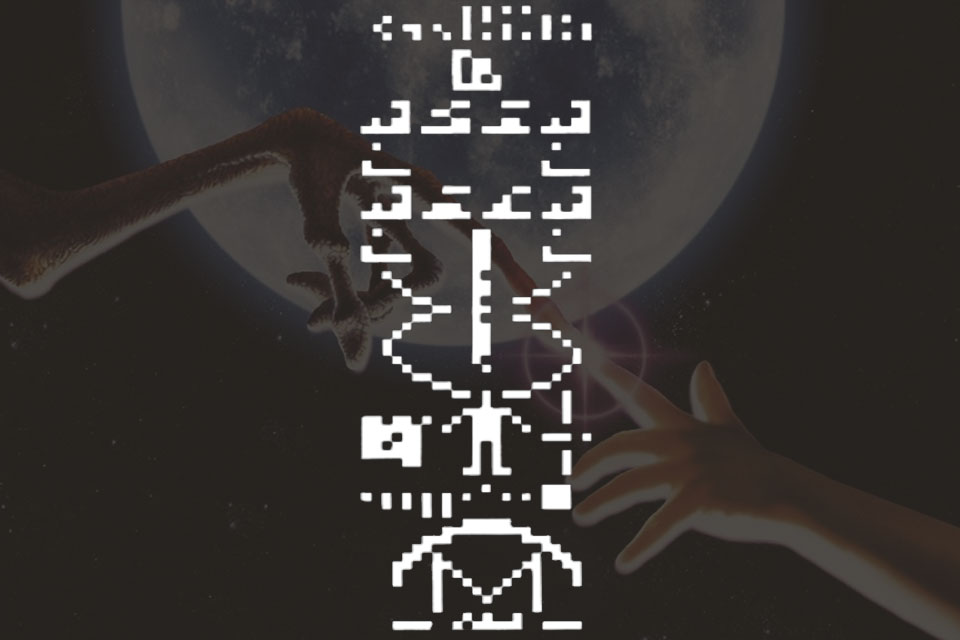

We’re coming up on the 50th anniversary of the Arecibo message, the radio signal we beamed toward the globular cluster M13 in 1974. Its 1,679 bits communicated some of the most fundamental facts about human biology and our knowledge of math and science. To mark the occasion, a group at the Jet Propulsion Laboratory has designed an updated message and would like to beam it out to some other clusters in the hope of receiving an answer some day (“some day” most likely meaning hundreds or thousands of years from now).

The updated message is spectacular. It condenses a staggering amount of information about us and what we know into a very small package, which is then encoded as a visual bitmap that its designers hope will be universally decodable. (This is much debated: the Arecibo message, also a bitmap, was sent to a selection of the smartest people on Earth. Only one of them figured out that it was a bitmap, and even he couldn’t read it.)

But the contents and format aside, there is also an ongoing debate about whether we should be shouting “here we are” out into the void at all – let alone “here we are and here’s how advanced we are and here are the details of our biology.”

I think most people’s gut reaction to this is, “yeah…that does not seem like a good idea.” But the counterargument goes: “We have already been leaking electromagnetic radiation out into space for 100 years, so we can’t hide ourselves anyway. Might as well be friendly!”

However, at the same time, the researchers promoting METI (“Messaging Extraterrestrial Intelligence”) programs point out that detecting that radiation at any interstellar distance from Earth would require a radio telescope several orders of magnitude larger than any we have built to date. Hence the need to send a focused message. (Of course, more advanced civilizations will have more advanced detection methods, but that only reinforces the point that a deliberate message is unnecessary.)

This is contradictory. Either we are already detectable and do not need to send a message, or we are not and we do (if we want other intelligences to be aware of us and able to find us).

So let us assume we are not already detectable. Then the question of whether to send a message becomes relevant. What surprises me about this debate is how little Game Theory has been applied to the conversation. It seems like precisely the kind of scenario for which Game Theory was invented.

We have two parties, their motivations and desires unknown, and we need to determine the optimal behavior for both so we can determine the optimal behavior for ourselves.

Interestingly, to date, only one major piece of research seems to have been published on the topic. Funnily enough, it was published in 2012 by a professor just a few minutes from me, at the Institute of Science and Technology in Klosterneuburg.

He applies the Prisoner’s Dilemma to the question. To quote from the New Scientist piece about his work referenced in the footnotes: “De Vladar reasoned that the SETI dilemma is essentially the same, but reversed. Mutual silence for prisoners is equivalent to mutual broadcasting for aliens, giving the best results for both civilizations. And while a selfish prisoner rats, a selfish civilization is silent, waiting for someone else to take the risk of waving ‘Over here!’ at the rest of the universe.”

He then goes on to say that the problem with using the Prisoner’s Dilemma is that it is hard to put a value on First Contact. It could be the best thing ever to happen to humanity, or the worst. Consequently, the optimal number of messages to send out into the cosmos shifts depending on what value you assign to it.
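To make the reversed dilemma in the quote concrete, here is a minimal sketch using textbook Prisoner’s Dilemma payoffs. The numbers (TEMPTATION = 5, REWARD = 3, PUNISHMENT = 1, SUCKER = 0) and the function names are hypothetical illustrations, not values from de Vladar’s work; only their ordering matters, with “broadcast” playing the role of cooperation and “silent” the role of defection.

```python
# Hypothetical textbook Prisoner's Dilemma payoffs (T > R > P > S).
# These are illustrative numbers, NOT taken from de Vladar's paper.
TEMPTATION, REWARD, PUNISHMENT, SUCKER = 5, 3, 1, 0
ACTIONS = ("broadcast", "silent")


def payoff(mine, theirs):
    """Payoff to a civilization choosing `mine` when the other chooses `theirs`."""
    if mine == "broadcast" and theirs == "broadcast":
        return REWARD       # both take the risk, both benefit from contact
    if mine == "silent" and theirs == "broadcast":
        return TEMPTATION   # the other side took all the risk
    if mine == "broadcast" and theirs == "silent":
        return SUCKER       # we exposed ourselves and got nothing back
    return PUNISHMENT       # mutual silence: safe but empty


def best_response(theirs):
    """The action that maximizes our payoff given the other side's action."""
    return max(ACTIONS, key=lambda mine: payoff(mine, theirs))


for theirs in ACTIONS:
    print(f"If they choose {theirs!r}, our best response is {best_response(theirs)!r}")
print("mutual broadcast:", payoff("broadcast", "broadcast"))
print("mutual silence:  ", payoff("silent", "silent"))
```

With these numbers, silence is the best response whatever the other civilization does, even though mutual broadcasting pays both sides more than mutual silence. The difficulty described above, putting a value on First Contact, amounts to not knowing these four numbers, or even their ordering.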

However, I wonder if the Prisoner’s Dilemma is the correct model. It seems to me that the better model is the Tragedy of the Commons.

The specifics of the Tragedy of the Commons have been debated for literally centuries, but at its core, the question is: when a community shares a common, renewable resource, what is the optimal strategy for each member of that community to use when exploiting that resource over the long term?

If you assume there are only two parties exploiting the common resource, it is best in the long term if both parties limit their exploitation. Both gain less in the short term, but the resource renews itself and can be exploited indefinitely. The problem is that, in the short term, there is a tremendous benefit to over-exploiting the resource if the other party is limiting their exploitation.
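The two-party dynamic can be sketched as a toy simulation. This is not the math from any particular paper; it assumes a single stock that regrows logistically, two parties that each harvest a fixed fraction of the current stock every round, and hypothetical parameter values (a capacity of 100, a growth rate of 0.5, and harvest fractions of 0.1 for “restrained” and 0.4 for “greedy”).

```python
def simulate_commons(harvest_a, harvest_b, rounds,
                     stock=100.0, capacity=100.0, growth_rate=0.5):
    """Toy two-party commons (hypothetical model and parameters).

    Each round, both parties harvest a fixed fraction of the current stock,
    then the remaining stock regrows logistically toward `capacity`.
    Returns the cumulative harvest of party A and party B.
    """
    if harvest_a < 0 or harvest_b < 0 or harvest_a + harvest_b > 1:
        raise ValueError("harvest fractions must be non-negative and sum to at most 1")
    total_a = total_b = 0.0
    for _ in range(rounds):
        take_a = harvest_a * stock
        take_b = harvest_b * stock
        total_a += take_a
        total_b += take_b
        stock -= take_a + take_b
        # Logistic regrowth: fastest at half capacity, zero at full capacity or at zero.
        stock += growth_rate * stock * (1 - stock / capacity)
    return total_a, total_b


RESTRAINED, GREEDY = 0.1, 0.4  # hypothetical harvest fractions

for horizon in (1, 50):
    print(f"{horizon} round(s):")
    print("  both restrained:       ", simulate_commons(RESTRAINED, RESTRAINED, horizon))
    print("  A greedy, B restrained:", simulate_commons(GREEDY, RESTRAINED, horizon))
    print("  both greedy:           ", simulate_commons(GREEDY, GREEDY, horizon))
```

Over a single round, grabbing more pays (40 versus 10 for party A). Over fifty rounds, mutual restraint pays each party far more than either greedy scenario, because heavy harvesting drives the stock toward collapse. In this toy model, the whole tragedy comes from weighing the present above the future.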

The math behind this is extremely complex and beyond me, but the common sense behind it seems obvious to me. Throughout history, we have seen it play out over and over again, from the overhunting of mammoths 15,000 years ago, to the collapse of various fisheries in the last 50 years, to the current climate crisis. Human beings are very bad at weighing future payoffs against present ones. If you are interested in the math, there is an excellent paper here.

For most of my life, people have called me cynical when I took the stance that the Tragedy of the Commons is real and that our children will bear the burden of its apotheosis in the collapse of the planet. But I think, today, most people accept that we are staring human history’s starkest example of the Tragedy of the Commons in the face.

In which case, I have to ask: is it really a good idea to alert alien intelligences to our existence? In the end, the resources of the galaxy, and certainly those of our nearby neighbors, are finite. This might seem like a ridiculous thing to say. The galaxy is incomprehensibly huge, so while its matter is technically finite, from a practical perspective shouldn’t there be “enough to go round” even if there are 100 other intelligent lifeforms out there? Or 1000? Or more? (One of the lowest estimates of the number of Earth-like planets in the Milky Way is 300 million!)

But, first of all, this is precisely what people said about Earth only a generation or two ago. Human beings are also particularly bad at comprehending exponential growth, a fact still best illustrated, more than seven centuries later, by the story of the wheat on the chessboard recorded by Ibn Khallikan in the 13th century (strictly speaking, that is geometric growth, but it makes an even better example). If we can’t learn from our recent history exactly how quickly “a-lot-of-resources-but-still-finite” can be used up by the exponential growth of populations, I don’t know what to say.
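The chessboard story is easy to check with a few lines of Python: one grain on the first square, doubling on each square after it. The third helper is a hypothetical illustration rather than part of the original story: it applies the same doubling to the lower estimate of 300 million Earth-like planets mentioned above, asking how many doublings it would take for a demand that starts at one planet to outstrip them all.

```python
def grains_on_square(n):
    """Grains on square n (1-indexed) when each square doubles the previous one."""
    return 2 ** (n - 1)


def total_grains(squares=64):
    """Total grains on the first `squares` squares of the board."""
    return sum(grains_on_square(n) for n in range(1, squares + 1))


def doublings_to_reach(target):
    """Hypothetical helper: doublings, starting from 1, needed to reach at least `target`."""
    count, amount = 0, 1
    while amount < target:
        amount *= 2
        count += 1
    return count


print(total_grains(32))                  # first half of the board: 4294967295
print(total_grains(64))                  # whole board: 18446744073709551615
print(doublings_to_reach(300_000_000))   # 29
```

The first half of the board holds about 4.3 billion grains; the full board holds about 18.4 quintillion, because the last square alone holds more than all the squares before it combined. By the same arithmetic, only 29 doublings take a single planet’s worth of demand past 300 million planets.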

Secondly, I think a slightly specious argument is relevant here (but one that is fun to think about and does give an idea of scale). Suppose you accept the Kardashev Scale and believe that a major step in the progression of a civilization is harnessing the total power output of its local star with 100% efficiency. Suppose also that this is done with one of the most commonly touted designs, a Dyson Sphere (a shell that completely surrounds the star). Then consider that even if we converted the entire non-solar mass of our system into usable matter to build that Dyson Sphere, we would have less than roughly 0.1% of the necessary material.

In other words, as incomprehensibly large as our galaxy may be, even the most back-of-the-envelope calculation about the most basic (if fantastic) of objectives suggests that the usable resources in the galaxy are, in fact, finite on that same galactic scale. That is, if you assume a civilization is advanced enough to contact us, and certainly if it is advanced enough to reach us, it is much more reasonable to assume that it has exponentially greater resource needs than we do to maintain its “standard of living.”

The counter to this argument, of course, is that if they are that much more advanced, they will also be that much more efficient. My answer to this is: well, maybe. But our own history is strong evidence to the contrary, and that history is a fact, not speculation.

It is also a fact that we are on the brink of environmental collapse thanks to the Tragedy of the Commons operating on a global scale. We have not demonstrated that we are able to think of the communal good in our consumption of resources, even when the communal “other” is literally our own children.

We have not proved ourselves altruistic when it comes to our own species. So why on Earth (pun intended) do we assume an alien intelligence would be?

Note that I’m not suggesting we should be afraid of an alien invasion. That seems far-fetched for a variety of reasons that would require another essay. But I am suggesting that if we do come into contact with an extraterrestrial civilization, the most likely scenario is that we will immediately find ourselves competing with them for resources. And by notifying them of our existence early on, we are only encouraging them to step up their exploitation of those resources without “texting us back.”

So I have to say, as excited as I am by the thought of alien intelligences, and as extremely unlikely as it is that one of our beamed messages would actually reach an alien civilization capable of processing it, I think we probably shouldn’t be letting the galaxy know we’re here.

After all, if we have been unable to share resources with our fellow man, how can we expect more from intelligences with whom we may share nothing but math?